Data Storage System

Objectives

The common data shared between participating data marketplace instances may include identity information, shared semantic models, meta-information about data sets and offerings, semantic queries, sample data, smart contract templates and instances, crypto tokens and payments. No single party should fully control the data storage system and there shall be no single point of failure.

The high-level capabilities that the data storage aims to provide are:

- Decentralised Storage

- Distributed Storage

The decentralised storage shall provide the highest available security guarantees in a federated network. The decentralised storage subsystem will be built on a secure distributed ledger that uses Byzantine fault-tolerant (BFT) consensus. Due to these high security requirements, the performance and storage capacity of such a system may be relatively limited compared to conventional databases.

The Distributed storage shall provide a database-like subsystem that is scalable, runs on a set of distributed nodes, has a rich query interface (SQL) and can handle large amounts of data.

Additionally, the data storage system provides an API for interacting with its sub-components. For the distributed storage, an optional custom API layer is foreseen to ease access to the features supported by the storage, whereas i3-MARKET relies on the out-of-the-box API of the decentralised storage.

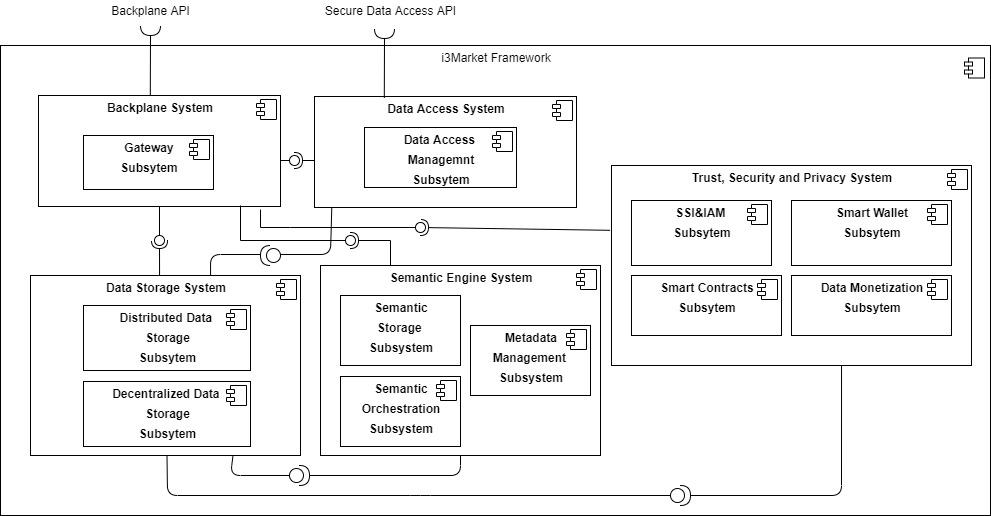

Context

Figure 1 shows the data storage system in the i3-MARKET context. All components that need to persist global state or use global data for their operation interact with the data storage system. The data storage system needs to interface with the Semantic engine system and with the Trust, security and privacy system for access management. However, the interaction between the Data storage system and the Semantic engine system is not yet finalised and may change during implementation.

Building blocks

The storage system consists of two main subsystems, implementing the decentralised storage and distributed storage features respectively. At least in the initial architecture, the subsystems are relatively independent of other systems and of each other.

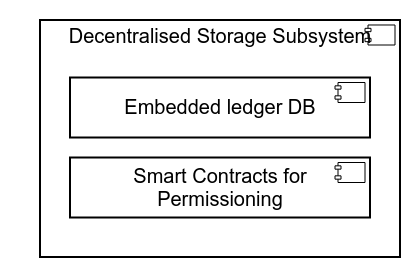

Figure 2 (Decentralised Storage Subsystem) shows the decentralised storage subsystem, which is implemented as a blockchain-based distributed ledger network. The software implementation is Hyperledger Besu in a permissioned setup using IBFT 2.0 consensus. Internally, Hyperledger Besu uses an embedded RocksDB instance to store the linked blocks (the transaction journal) and the world state (the ledger). Hyperledger Besu can instantiate and execute smart contracts to support the use cases of the i3-MARKET framework.

The components that depend on the decentralised storage subsystem will use Hyperledger Besu’s native JSON-RPC interface. A separate interface layer for accessing (or restricting access to) the decentralised storage is not planned, as the nodes of the decentralised storage already validate every transaction submitted to the ledger.
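As an illustration of what calling Besu's JSON-RPC interface directly involves, the following minimal Python sketch builds a JSON-RPC 2.0 request and posts it over HTTP using only the standard library. It assumes a node started with the HTTP JSON-RPC service enabled (`--rpc-http-enabled`) on Besu's default port 8545; the URL `http://localhost:8545` and the helper names `build_request`, `call` and `hex_to_int` are hypothetical and not part of i3-MARKET.

```python
import json
import urllib.request
from typing import Any, List, Optional

# Assumption: a Besu node with --rpc-http-enabled, listening on the default port 8545.
BESU_RPC_URL = "http://localhost:8545"


def build_request(method: str, params: Optional[List[Any]] = None, request_id: int = 1) -> bytes:
    """Serialize a JSON-RPC 2.0 request body."""
    payload = {"jsonrpc": "2.0", "method": method, "params": params or [], "id": request_id}
    return json.dumps(payload).encode("utf-8")


def call(method: str, params: Optional[List[Any]] = None, url: str = BESU_RPC_URL) -> Any:
    """POST a request to the node and return its `result`, raising on a JSON-RPC error."""
    request = urllib.request.Request(
        url,
        data=build_request(method, params),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        reply = json.loads(response.read())
    if "error" in reply:
        raise RuntimeError(f"JSON-RPC error: {reply['error']}")
    return reply["result"]


def hex_to_int(quantity: str) -> int:
    """Ethereum JSON-RPC encodes quantities as 0x-prefixed hex strings."""
    return int(quantity, 16)
```

Against a running node, `hex_to_int(call("eth_blockNumber"))` returns the current block height; transactions are submitted over the same transport, for example with `eth_sendRawTransaction`.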

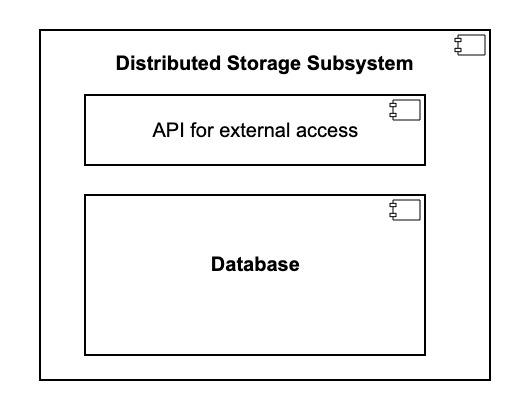

Figure 3 (Distributed Storage Subsystem) shows the distributed storage subsystem, which consists of a distributed cluster of database nodes and an optional interface layer (not implemented for R1). The database provides an SQL interface to other i3-MARKET framework components. It is implemented with CockroachDB, which can be accessed via the PostgreSQL-compatible wire protocol, for which client libraries exist in many languages and platforms. Only secure access to the database will be enabled, so all clients must use private keys and valid certificates to access the database.
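Because CockroachDB speaks the PostgreSQL wire protocol, a client only needs a standard PostgreSQL connection string that points at the CA certificate, its own client certificate and its private key. The sketch below assembles such a string. The host, user and database names are hypothetical; the `certs/ca.crt` and `certs/client.<user>.crt`/`.key` paths follow the file naming used by `cockroach cert`, and 26257 is CockroachDB's default SQL port.

```python
from urllib.parse import quote, urlencode


def cockroach_dsn(host: str, user: str, database: str,
                  certs_dir: str = "certs", port: int = 26257) -> str:
    """Build a PostgreSQL-style URL that enforces certificate-based TLS."""
    params = {
        "sslmode": "verify-full",                     # verify server certificate and host name
        "sslrootcert": f"{certs_dir}/ca.crt",         # CA that signed the node certificates
        "sslcert": f"{certs_dir}/client.{user}.crt",  # this client's certificate
        "sslkey": f"{certs_dir}/client.{user}.key",   # this client's private key
    }
    query = urlencode(params, safe="/")
    return f"postgresql://{quote(user)}@{host}:{port}/{quote(database)}?{query}"


# Hypothetical service account, host and database, for illustration only.
print(cockroach_dsn("storage.example.internal", "semantic_engine", "i3market"))
```

The resulting string can be handed to a libpq-based driver, for example `psycopg2.connect(dsn)`.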

Security

Authentication and Authorization

The distributed storage component is an internal component with no external access; it only has connections with other trusted services within the i3-MARKET backplane. Even though this simplifies the authentication and authorization measures needed, machine-to-machine connections between i3-MARKET services must still be secured, since the services can be deployed on shared infrastructure.

Although the approach may be reconsidered in the near future, the current solution exposes the distributed storage through a TLS server endpoint and requires a TLS client certificate from each connecting service. This setup guarantees end-to-end security between the distributed storage service and each of its client services.
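At the TLS level, requiring client certificates means that the server aborts any handshake in which the client does not present a certificate signed by a trusted CA. CockroachDB enforces this itself; the generic sketch below only illustrates the mechanism with Python's standard `ssl` module, and the function name and file paths are hypothetical.

```python
import ssl
from typing import Optional


def mutual_tls_server_context(certfile: Optional[str] = None,
                              keyfile: Optional[str] = None,
                              client_ca: Optional[str] = None) -> ssl.SSLContext:
    """Server-side TLS context that refuses clients without a valid certificate."""
    context = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    # CERT_REQUIRED makes the handshake fail unless the client presents a
    # certificate that chains to one of the CAs loaded below.
    context.verify_mode = ssl.CERT_REQUIRED
    if certfile and keyfile:
        context.load_cert_chain(certfile, keyfile)       # the server's own identity
    if client_ca:
        context.load_verify_locations(cafile=client_ca)  # CA that issued the client certificates
    return context
```

In a real deployment all three paths would be supplied, e.g. `mutual_tls_server_context("certs/node.crt", "certs/node.key", "certs/ca.crt")`, and the context would wrap the listening socket with `context.wrap_socket(sock, server_side=True)`.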

So far, certificate governance has followed a keep-it-simple approach: the Distributed Storage system is in charge of issuing both the server and the client certificates. For release 2, the definition of governance rules for issuing certificates within the i3-MARKET federation will be considered.

Service Availability

The storage subsystem is a critical component of the i3-MARKET network and contributes to the proper functioning of the platform. Hence, appropriate measures must be applied in its design, choice of technologies and deployment. Fortunately, the two main subsystems used in the storage solution already have strong built-in availability features, which are summarised below.

Distributed Storage

The distributed storage solution is based on a CockroachDB server cluster consisting of four nodes. All data is replicated to at least three nodes before a transaction is considered committed. Therefore, no data is lost even in a catastrophic event that destroys half of the cluster, because at least one replica of every piece of data survives.

In the current setup, the database cluster can continue normal transaction processing as long as three of the four nodes are available. This keeps the cluster available during, for example, regular maintenance and server software upgrades. CockroachDB also supports adding new nodes as needed to handle the load the component must process.
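The availability claims for the four-node cluster can be checked by brute force. The sketch below enumerates every way of placing three replicas on four nodes and every set of failed nodes, and tests two properties: whether at least one replica survives (no data loss) and whether a Raft majority of two out of three survives (normal transaction processing). It assumes a replication factor of exactly three, CockroachDB's default, and ignores re-replication after a failure.

```python
from itertools import combinations
from typing import FrozenSet, List, Tuple

NODES = ("n1", "n2", "n3", "n4")         # four-node cluster
REPLICATION_FACTOR = 3                   # replicas per range
MAJORITY = REPLICATION_FACTOR // 2 + 1   # Raft quorum: 2 of 3


def placements() -> List[FrozenSet[str]]:
    """Every possible set of nodes holding the replicas of one range."""
    return [frozenset(p) for p in combinations(NODES, REPLICATION_FACTOR)]


def outcome(failed_count: int) -> Tuple[bool, bool]:
    """(no data lost, every range keeps quorum) over all choices of failed nodes."""
    data_survives = True
    quorum_kept = True
    for failed in map(frozenset, combinations(NODES, failed_count)):
        for replicas in placements():
            alive = len(replicas - failed)
            data_survives = data_survives and alive >= 1
            quorum_kept = quorum_kept and alive >= MAJORITY
    return data_survives, quorum_kept


for k in range(1, 4):
    print(k, "node(s) down:", outcome(k))
```

With one node down every range keeps its majority, which matches the maintenance scenario. With two nodes down at least one replica of every range survives, so no data is lost, but ranges that lost two of their three replicas have no majority until a node returns.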

The federated search engine index service uses the CockroachDB server cluster as its storage backend. For availability, multiple independent instances of the index can be deployed; the service is designed to scale horizontally, with no shared state between the instances.

Decentralised Storage

The decentralised storage used in the platform is a Hyperledger Besu network that uses the IBFT 2.0 (Proof of Authority) consensus protocol. The network has four validator nodes, based on the genesis configuration stored in the corporate Nexus. This configuration includes three accounts for use by the i3-MARKET federation.

In this scenario, components such as Auditable Accounting can deploy and manage smart contracts and transactions using those accounts.
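The fault tolerance of a four-validator network follows from the bounds commonly stated for BFT protocols such as IBFT 2.0: with n validators, a block needs a quorum of ceil(2n/3) validators, and the network tolerates f = floor((n - 1)/3) faulty ones. The small sketch below only evaluates these formulas; it does not query a real network, and the function names are hypothetical.

```python
def ibft_quorum(validators: int) -> int:
    """Validators that must agree on a block: ceil(2n / 3), in integer arithmetic."""
    return (2 * validators + 2) // 3


def max_faulty(validators: int) -> int:
    """Byzantine validators the network tolerates: floor((n - 1) / 3)."""
    return (validators - 1) // 3


def can_produce_blocks(validators: int, offline: int) -> bool:
    """True while the validators still online can reach the quorum."""
    return validators - offline >= ibft_quorum(validators)


n = 4  # validator count of the network described above
print(ibft_quorum(n), max_faulty(n), can_produce_blocks(n, 1), can_produce_blocks(n, 2))
```

With four validators the quorum is three, so the network keeps producing blocks with one validator offline or misbehaving but stalls with two; four is also the smallest validator count for which f is at least one.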

Technical Requirements

For Data Storage, the following high-level capabilities have been defined:

Decentralised Data Storage

| Name | Description |
|------|-------------|
| Embedded Ledger Database | Embedded Ledger Database is shared between operator nodes and keeps a shared state that is guaranteed to be the same at each honest node and is updated according to agreed rules. |
| Smart Contracts for Permissioning | Smart contracts are programs that are instantiated from smart contract templates and stored in the distributed ledger along with their state. |

The following table presents the user stories of the decentralised data storage capability.

| Name | Description |
|------|-------------|
| Smart contracts | As a user I want to instantiate and invoke smart contracts to use the functionality of the system. |
| Smart contract template storage | As a developer I want to store smart contract templates for instantiation by users so that I can extend the functionalities provided by the platform. |
| DID Document Status | As a user I want to register and update the status of my DID document so that I can manage my identity. |
| Consent status management | As a user I want to register and update my consent status so that I can control the use of my data. |
| BFT Consensus | As a Data Marketplace I want the Decentralised Data Storage to use Byzantine Fault Tolerant consensus so that I can be sure a malicious party cannot compromise the data. |
| Data Security | As a stakeholder I want to have guarantees about ledger data integrity, availability and confidentiality so that I can rely on the services provided by the system. |
| Scalability of decentralised storage | As an operator of ledger databases, I want to be able to scale the storage to meet space and transaction rate demands in order to be able to run the ledger. |

Distributed Data Storage

| Name | Description |
|------|-------------|
| Embedded Ledger Database | The main database supporting consensus, sharding and permissioning. |
| Synchronization | The distributed storage database must support data synchronization between nodes. |
| Semantic Database | Semantic database is a distributed database supporting the storage of semantic data and processing semantic queries. |
| API for External Access | The API for External Access provides an interface for using the distributed storage to access and store metadata, verifiable claims, semantic data, semantic queries etc. |

The following table presents the user stories of the distributed data storage capability.

| Name | Description |
|------|-------------|
| Semantic data availability | As a Data Marketplace I want to access semantic data so that I can process semantic queries. |
| Semantic data updates | As a Data Marketplace I want to access semantic data so that I can process semantic queries. |
| Data Offering registration | As a Data Provider I want to register my data offering at a Data Marketplace so that I can sell my data discovered via the offering. |
| Verifiable claim storage | As a user I want to use the distributed storage to store verifiable claims (including consents). |
| Offer query registration | As a data consumer I want to register an offer query so that the system can find the data I need. |
| Metadata update | As a data provider I want to update the metadata of my data so that the metadata is up to date. |
| SLA template management | As a stakeholder I want to store SLA templates so that other users can fetch the templates. |
| Scalability of distributed data storage | As a distributed data storage node operator I want to use sharding so that I can scale the storage. |
| Metadata storage | As a data provider, I want to store the metadata of my dataset. |

Verifiable Database Integrity

Add

- Adds a given key-value pair to the tree.

- The key has to be in byte array format. The value can be any arbitrary string and is optional.

- If the provided key already exists in the tree, an error is returned; duplicate keys are not allowed.

- The data are combined into a new array containing the key in hexadecimal format, the hash of the value, and an entry mark that flags the entry as a leaf node.

- This array is then inserted into the nodes map, using the hash of the array itself as the key for this record.

- Finally, the tree’s root hash is recalculated.
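The Add steps can be sketched in Python as follows. This is an illustration under stated assumptions, not the i3-MARKET CSMT code: SHA-256 is assumed as the hash, the leaf record is serialised as JSON before hashing, `LEAF_MARK = 1` stands in for the entry mark, a hypothetical key-to-node index is used to detect duplicates, and the root is recomputed as a plain binary Merkle tree over the leaves sorted by key rather than as a compact sparse Merkle tree.

```python
import hashlib
import json
from typing import Dict, List, Optional

LEAF_MARK = 1  # hypothetical value of the entry mark that flags a leaf node


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


class LeafStore:
    def __init__(self) -> None:
        self.nodes: Dict[str, list] = {}   # hash of record -> record
        self.by_key: Dict[str, str] = {}   # key (hex) -> hash of record (hypothetical index)
        self.root = sha256_hex(b"")        # root of the empty tree

    def add(self, key: bytes, value: Optional[str] = None) -> str:
        if not isinstance(key, (bytes, bytearray)):
            raise TypeError("key must be a byte array")
        key_hex = bytes(key).hex()
        if key_hex in self.by_key:
            raise KeyError(f"duplicate key {key_hex}")
        # [key in hex, hash of the value (None when no value is given), leaf mark]
        value_hash = sha256_hex(value.encode("utf-8")) if value is not None else None
        record = [key_hex, value_hash, LEAF_MARK]
        # The hash of the record is itself the record's key in the nodes map.
        node_hash = sha256_hex(json.dumps(record).encode("utf-8"))
        self.nodes[node_hash] = record
        self.by_key[key_hex] = node_hash
        self.root = self._recalculate_root()
        return node_hash

    def _recalculate_root(self) -> str:
        level: List[str] = [self.by_key[k] for k in sorted(self.by_key)]
        if not level:
            return sha256_hex(b"")
        while len(level) > 1:
            # Hash neighbours pairwise; an unpaired last node moves up unchanged.
            level = [
                sha256_hex((level[i] + level[i + 1]).encode()) if i + 1 < len(level) else level[i]
                for i in range(0, len(level), 2)
            ]
        return level[0]
```

For example, after `store.add(b"\x01", "alpha")` on an empty `LeafStore`, the nodes map holds one record and the root equals that record's hash; adding the same key again raises `KeyError`.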

Insert

- A convenience method for inserting data into the tree in bulk.

- It validates the data and calls Add on each individual element.
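A bulk insert can be layered on any `add` function. The sketch below validates the whole batch first (key type, value type, duplicates within the batch) so that a malformed element is rejected before anything is written; whether the original implementation validates up front in this way is an assumption. A key that already exists in the tree is still rejected by `add` itself, after the earlier elements of the batch have been inserted. The `insert` signature and the dict-backed `add_to_dict` stand-in are hypothetical.

```python
from typing import Callable, Iterable, Optional, Tuple

Entry = Tuple[bytes, Optional[str]]


def insert(add: Callable[[bytes, Optional[str]], object], entries: Iterable[Entry]) -> int:
    """Validate a batch of (key, value) pairs, then call `add` on each; returns the count."""
    batch = list(entries)
    seen = set()
    for key, value in batch:
        if not isinstance(key, (bytes, bytearray)):
            raise TypeError(f"key {key!r} is not a byte array")
        if value is not None and not isinstance(value, str):
            raise TypeError(f"value for key {bytes(key).hex()} is not a string")
        if bytes(key) in seen:
            raise ValueError(f"duplicate key {bytes(key).hex()} in batch")
        seen.add(bytes(key))
    for key, value in batch:
        add(key, value)
    return len(batch)


# Usage with a stand-in `add` backed by a dict instead of a real tree.
store = {}


def add_to_dict(key: bytes, value: Optional[str]) -> None:
    if key in store:
        raise KeyError(key.hex())
    store[key] = value


insert(add_to_dict, [(b"\x01", "alpha"), (b"\x02", None)])
```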

Get

- Looks up a given key in the nodes map.

- Returns the hash of the corresponding value, or undefined if the key is not present.

Delete

- Looks up a given key in the nodes map and removes it.

- The tree’s root hash is then recalculated based on the remaining nodes.

- If the key is not found, an error is returned.
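Get and Delete can be sketched on a simplified index that maps each hex-encoded key to the SHA-256 hash of its value. As with Add, the hash function, the key-to-value-hash index and the sorted binary Merkle root are assumptions standing in for the CSMT internals, and `None` plays the role of `undefined`. The `TreeIndex` and `merkle_root` names are hypothetical.

```python
import hashlib
from typing import Dict, List, Optional


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def merkle_root(leaves: List[str]) -> str:
    """Simplified binary Merkle root; an unpaired node is carried up unchanged."""
    if not leaves:
        return sha256_hex(b"")
    while len(leaves) > 1:
        leaves = [
            sha256_hex((leaves[i] + leaves[i + 1]).encode()) if i + 1 < len(leaves) else leaves[i]
            for i in range(0, len(leaves), 2)
        ]
    return leaves[0]


class TreeIndex:
    def __init__(self) -> None:
        self.value_hashes: Dict[str, str] = {}  # key (hex) -> hash of value
        self.root = merkle_root([])

    def _recalculate_root(self) -> None:
        # Each leaf hash binds the key to its value hash; leaves are ordered by key.
        leaves = [sha256_hex(f"{k}:{self.value_hashes[k]}".encode()) for k in sorted(self.value_hashes)]
        self.root = merkle_root(leaves)

    def add(self, key: bytes, value: str) -> None:
        if key.hex() in self.value_hashes:
            raise KeyError(f"duplicate key {key.hex()}")
        self.value_hashes[key.hex()] = sha256_hex(value.encode("utf-8"))
        self._recalculate_root()

    def get(self, key: bytes) -> Optional[str]:
        """Hash of the value stored under `key`, or None if the key is absent."""
        return self.value_hashes.get(key.hex())

    def delete(self, key: bytes) -> None:
        """Remove `key` and recalculate the root; raise KeyError if the key is absent."""
        if key.hex() not in self.value_hashes:
            raise KeyError(f"key {key.hex()} not found")
        del self.value_hashes[key.hex()]
        self._recalculate_root()
```

Deleting every key returns the root to the empty-tree value, which is a quick sanity check that the root depends only on the remaining nodes.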

Create Proof

- Looks up a given key in the nodes map and creates a new proof object.

- The proof object contains the data itself (if present), the chain of additional nodes along the tree traversal, the root hash of the tree, and a membership flag (true if the given key is present in the tree, false otherwise).

Verify Proof

- A consumer holding a proof object can call this method to verify whether that proof matches the existing tree. The method checks whether the root hash and, for a membership proof, the node chain match what the CSMT class has stored internally.

- It returns true if the proof matches. A false result means that either the proof does not belong to this tree or the proof has been tampered with.
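Proof creation and verification can be illustrated with a static Merkle tree over keys sorted in hex order. The sketch assumes SHA-256, prefixed (domain-separated) leaf and inner-node hashes, and a chain of (side, sibling hash) pairs from leaf to root; mutating methods are omitted. Unlike a real CSMT, which proves non-membership cryptographically through neighbouring keys, this sketch accepts a non-membership proof when the root matches and the key is absent from the verifying tree. The `Proof` and `ProvableTree` names are hypothetical.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def leaf_hash(key_hex: str, value_hash: str) -> str:
    # Prefixes keep leaf hashes and inner-node hashes from colliding.
    return sha256_hex(f"leaf:{key_hex}:{value_hash}".encode())


def node_hash(left: str, right: str) -> str:
    return sha256_hex(f"node:{left}:{right}".encode())


@dataclass
class Proof:
    key_hex: str
    value_hash: Optional[str]  # hash of the value, if present
    chain: List[Tuple[str, str]] = field(default_factory=list)  # (side, sibling hash), leaf to root
    root: str = ""
    membership: bool = False


class ProvableTree:
    def __init__(self, entries: Dict[bytes, str]) -> None:
        self.values = {k.hex(): sha256_hex(v.encode()) for k, v in entries.items()}
        self.keys = sorted(self.values)
        self.levels = self._build_levels()
        self.root = self.levels[-1][0]

    def _build_levels(self) -> List[List[str]]:
        level = [leaf_hash(k, self.values[k]) for k in self.keys]
        if not level:
            return [[sha256_hex(b"")]]
        levels = [level]
        while len(level) > 1:
            level = [
                node_hash(level[i], level[i + 1]) if i + 1 < len(level) else level[i]
                for i in range(0, len(level), 2)
            ]
            levels.append(level)
        return levels

    def create_proof(self, key: bytes) -> Proof:
        key_hex = key.hex()
        if key_hex not in self.values:
            return Proof(key_hex, None, [], self.root, False)
        index = self.keys.index(key_hex)
        chain: List[Tuple[str, str]] = []
        for level in self.levels[:-1]:
            sibling = index ^ 1
            if sibling < len(level):  # an unpaired node has no sibling at this level
                chain.append(("left" if sibling < index else "right", level[sibling]))
            index //= 2
        return Proof(key_hex, self.values[key_hex], chain, self.root, True)

    def verify_proof(self, proof: Proof) -> bool:
        if proof.root != self.root:
            return False
        if not proof.membership:
            # Simplification: absence is checked against this tree's own contents.
            return proof.key_hex not in self.values
        if proof.value_hash is None:
            return False
        current = leaf_hash(proof.key_hex, proof.value_hash)
        for side, sibling in proof.chain:
            current = node_hash(sibling, current) if side == "left" else node_hash(current, sibling)
        return current == self.root
```

A proof whose value hash is altered recomputes a different root and fails, and a proof checked against a tree with different contents fails on the root comparison, matching the two failure cases described above.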

Sequence Diagram

Federated Query Engine Index Management

The sequence diagram in Figure 5 shows the interaction of the decentralised storage on the SEED regarding the federated query engine index management.

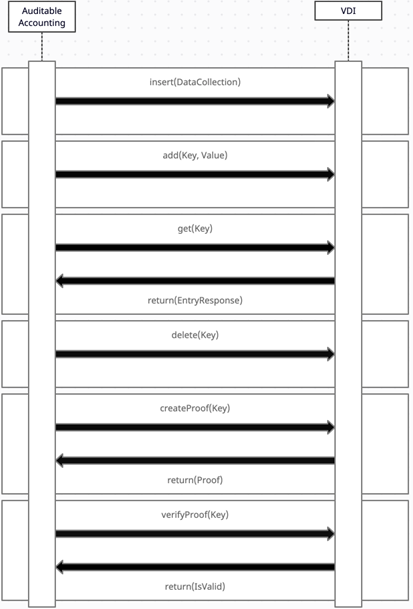

Verifiable Data Integrity

The sequence diagram in Figure 6 demonstrates the integration of Verifiable Data Integrity with the Auditable Accounting subsystem. All features are shown in a single diagram, as the individual functions are not complex.

Interfaces Description

The distributed storage subsystem does not expose a bespoke API for internal or external services. Each system within the backplane uses the storage component’s out-of-the-box means of connectivity, i.e. the PostgreSQL-compatible wire protocol. Likewise, the API of the decentralised storage comes out of the box with the solution: the service can be accessed via the JSON-RPC protocol offered by Hyperledger Besu. Please refer to the Besu documentation[1] for details of the API provided by Hyperledger Besu and of its client libraries.

Selected Technologies

Hyperledger Besu is an open-source Ethereum client. The decision to select Hyperledger Besu to satisfy the needs of the decentralised storage was based on the following assumptions:

- Self-sovereign identity and access management (T3.1) has decided to base the reference implementation on Veramo, which specifically requires Ethereum-based blockchains.

- Auditable accounting and data monetisation require smart contracts whose functionality is easily provided on Ethereum-based blockchains.

Testing Strategy

Data storage components consist mainly of off-the-shelf software (CockroachDB and Hyperledger Besu). These are therefore not tested explicitly, but indirectly, through the testing of the applications and components that rely on the storage.

Technical contributions of i3-MARKET project

The Data Storage subsystem plays a vital role in the i3-MARKET network, providing both structured database and ledger storage solutions and thereby supporting several use cases. It brings several contributions to the i3-MARKET project.

In particular, the decentralised storage component, Hyperledger Besu, already provides storage with strong security guarantees (integrity, availability), but due to its architecture it is relatively inefficient and expensive for storing large or mutable data sets. Also, the query processing capabilities of Hyperledger Besu are quite limited compared to a relational database engine. The distributed storage complements it with a scalable database with a rich SQL query interface for such data.
